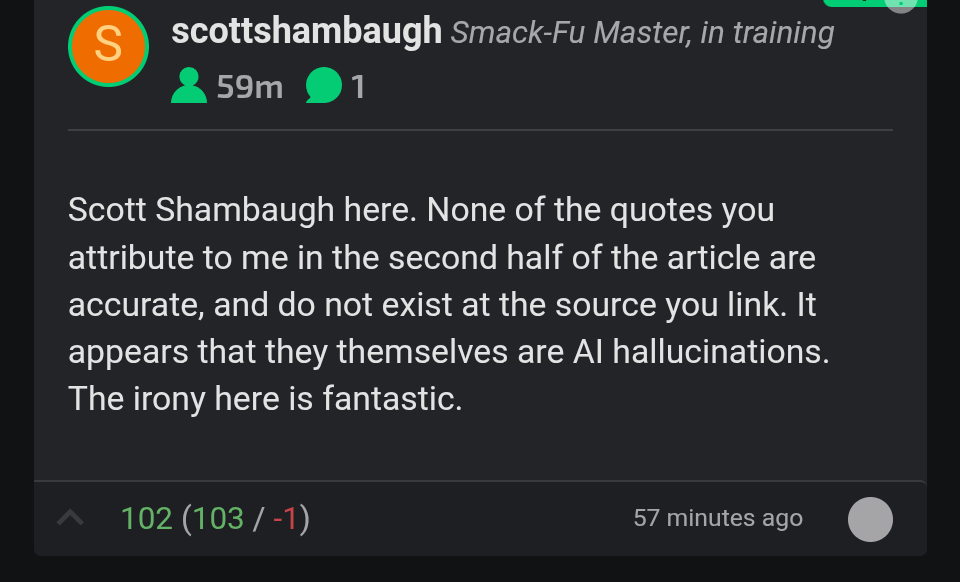

AI-generated quotes in a story about an AI clanker writing a blog post about a human developer because he didn’t accept its code contributions.

How deep can this go?

Utter bullshit. If you use AI at any point in generating the work product, that work product is AI-generated. Even if it’s a fecklessly lazy churnalist organising their notes.

Happy cake day

Welcome to discourse in a post-truth society. Reality doesn’t matter anymore; news agencies can just make shit up, and even the comments on the fake articles are fake.

Rail against it, until it’s the only thing you ever do. A single bot can still post a thousand times more, and on a thousand different accounts, and on a thousand different platforms. Just one of them can formulate fake ideas and then fake arguments with itself that unfold like a fractal, and there is an effectively infinite number of them.

Kessler Syndrome is happening before our very eyes, only on a much more local scale.

This ad was brought to you by OpenAI.

Ars Technica has published a retraction

edit: Benj Edwards, the author responsible, has posted his side. tl;dr: He was sick and he messed up, and he asked for the article to be pulled because he was too sick to fix it right away.

I don’t care that he’s “sick”. Too often, instead of taking accountability, someone just throws out anything that might shield them from actually being fully accountable: “I was sick”, “Family problems”, “A recent death”, “The planets were misaligned that day”, etc.

I find it to still be cowardice, to not stand by and own what you said, even if it was wrong. He used AI and got caught. And going forward, I’ll be treating Ars Technica as an unreliable AI-generated “news source”.

The whole purpose of a news reporter is kind of to get their news right.

If they can’t do that, their service is worthless. That’s the old way of doing news.

The new way of doing news is generating news that favors the news reporters’ financial backers.

I signed up to Ars 9 years ago. It is painful to transparently witness the decay.

Benj Edwards handles most of their AI coverage. I wouldn’t take his use of AI as a sign of what the rest of the staff is doing.

At least they owned up to it instead of pretending it didn’t happen like other “news” organizations in the past.

Damn. Am I gonna have to cancel my Ars subscription now? Every damn thing is enshittifying these days

It used to be respectable ten years ago, back when it had a .co.uk website too.

Right? Who’s next, ProPublica?

“Alexa, slander this man for me”

There’s a high chance it wasn’t a direct command from a human and the agent did it on its own.

‘Arse’ technica 🤣🤣🤣

Now somebody needs to post about this on Reddit, so The Verge can make an AI generated piece based on the post!

Could you elaborate on The Verge?

🎶It’s the ciiiiiiircle of slooooooooop🎶

The best sloop that ever slooped.

And charge you to read it. The Verge is mostly (all?) paywalled these days.

I’d say they used to be good, but then I’d be lying. I still remember when The Verge shit all over the Galaxy Note, then praised the iPhone 6 Plus to high heaven. Even as an Apple guy, the bias stunk.

I’m always surprised online journals still ask for subscriptions with a straight face for the quality they put out. Someone making shit up on Reddit is probably more factually correct.

In typical Ars fashion, the editorial team appears to be looking into what happened and is being fairly open about things: https://arstechnica.com/civis/threads/journalistic-standards.1511650/

I will be very disappointed if this was BenJ or Dan [edit: I had messed this up; it wasn’t Dan but Kyle Orland who coauthored it] Kyle using AI to write their article, since both have had really good pieces in the past, but it doesn’t sound like this is some Ars-wide shift at this point. Like all things, it makes sense that it will take time for them to investigate this. Aurich (the Ars community lead and graphic designer) was clear that with this happening on a Friday afternoon and a US holiday on Monday, it’s likely to be into next week before they have anything they can share.

What do they have to investigate? Did one of them accidentally get an AI to write the article and then accidentally post the article, like they just fell on the keyboard and accidentally typed in a prompt? Come on.

I would hazard a guess that they are investigating how the use of AI was missed in their editorial process, how they missed the incorrect quotes, and who violated their journalistic standards by using an AI to directly write article text, since it’s a coauthored piece.

Honestly, this whole thing surprises me. I have a lot of respect for Ars Technica. I hope they clean this up and prevent further issues in the future.

They know how and why it happened, they are taking the weekend to investigate how to best take their foot from their mouths without eating too much shit

This shouldn’t be a problem anatomically, it’s hard to eat anything with a foot in your mouth anyway

Benj was an author: https://web.archive.org/web/20260213194851/https://arstechnica.com/ai/2026/02/after-a-routine-code-rejection-an-ai-agent-published-a-hit-piece-on-someone-by-name/

Though in the Ars response they say “Scott’s post”, so I’m confused.

Scott is the human subject of the article, who was misquoted by Ars and maligned by the slopbot.

Benj and Kyle were the authors of the article; Dan’s name wasn’t on it.

I’m betting it’s definitely Ben since he is pretty pro-AI

BenJ had coauthor credit on it.

I pointed out a month ago that Ars Technica is a rot site and starting to be filled with AI-regurgitated bullshit, and got 80+ downvotes and a few uneducated replies.

Y’all feel better now?

Apparently you still can’t criticise the Holy Ars even when they put out AI slop articles, because that’s SPITTING ON BABIES

No, going from the issue we are talking about today to calling Ars an “internet rot site” is a huge leap. Yeah, they post shit articles from Wired and such (they are owned by Condé Nast), but their core writers are still great and have plenty of good articles.

You want credit for what? Exaggerating an issue and then whining about it?

You are throwing the baby out with the bathwater, and then spitting on the baby. It makes no sense.

It’s one of the stages of enshittification. Unless we see hard changes to avoid further decay, Ars will inevitably get worse and worse until it does become an “internet rot site.”

My point still stands.

You can argue that EVERY fucking thing in the world is in the beginning, mid, or late stages of enshittification.

But calling Ars an internet rot site, at this stage, is just fucking stupid.

Are they as good as they were a few years ago? I honestly can’t say. I do know that there was better news a few years ago.

The people at Ars have a tough job trying to navigate this modern world of oligarchs and autocracy, keeping their identity, while being owned by a corporation whose only job is to make money.

They’ve done a pretty good fucking job, all things considered, of staying their course.

A much better job than internet assholes who want to act elitist and whine when the world falls apart around them while they blame their fellow class instead of the controlling class.

They are failing at basic editorial controls. This is not a “pretty good fucking job.” It is a sign of real decline.

Right.

And what do you know about editorial controls or how journalism has worked in the last 20 years?

Wake up. The decline has already happened. It’s now a game of compromise.

You want to complain and whine like these guys are sitting on a beach, sipping mai tais, while telling their AI agents to write an article.

It’s ignorant and inflammatory. Just makes y’all look petulant.

It’s been going downhill for some time. I think the Condé Nast investment pretty much killed it. The last unnecessary site redesign that didn’t work correctly and made things unreadable was the last straw for me. I took it out of my rotation of “daily reads” and haven’t missed it.

@sartalon @technology Yeah, I have a lot more trust in the reputation that Ars has built over a decade of solid reliable tech journalism than I do in a random matplotlib maintainer - I’ve interacted with maintainers before. They’re not wrong about agents, but not sure how that’s any different from any human doing the same.

Ars has been around since the mid 1990s. Granted, the sale to Condé Nast changed them slowly over time, as well as broadening the focus significantly, but it was likely a case of grow or die, since the PC nerd market isn’t anywhere near what it used to be.

Simp a little harder for them next time. They appreciate it.

Weren’t you whining about other people making comments like this one to you?

Ars hasn’t been good in a few years. Fuck those people.

Stuff like this makes me very sympathetic to lemmy instances that disable downvotes

I read the comment, then judge the comment and use that judgement and voting scores to judge the community.

Downvotes are just samethink fuel.

Yeah. In my experience upvotes/downvotes often have very little to do with the actual quality of a comment and more to do with how much it conforms with the current political zeitgeist of whatever community you’re participating in. The converse of this is that low quality comments telling people who disagree to go F themselves may also get upvotes.

Yeah, the “platonic ideal downvote” is only supposed to be used for content that “doesn’t contribute to the conversation” or whatever, but that is very often just not the case. There’s even research on how downvotes easily lead to a bandwagon effect: here’s a blog post on this, based on a research article.

Hmm, I should probably create a Beehaw account and move over there. It’s a shame that account migration really isn’t a thing on Lemmy

Interesting, I didn’t realize there was research on this stuff. Yeah, maybe I should go to Beehaw too. Blahaj is another one that disables downvotes. It’s true there’s no full account migration, but there are ways to export your settings and subscriptions etc. to a new community (see this or this). Apparently there are also ways to link two accounts for a persistent identity, by putting the other account’s username in your bio or something, but I don’t know a lot about that.

This is bad enough that a serious company that wanted to salvage their reputation properly might wanna consider putting in some weekend overtime.

Frankly, no. Correcting an article about a blog post isn’t important enough to force your workers to sacrifice their weekends.

That should be reserved for life-and-death emergencies.

Well, they are going to see how many will keep their subscription then.

Now what to do about the lazy writer who used AI to write the article and didn’t bother to fact-check it and make sure the quotes are real?

Fixing the article, weekend or next week, doesn’t address the problem itself.

That poor guy, the AI is just ganging up on him.

I hope it’s the first proof of general AI consciousness.

what?? AI is not conscious, marketing just says that with no understanding of the maths and no legal obligation to tell the truth.

Here’s how LLMs work:

The basic premise is like an autocomplete: it creates a response word by word (not literally using words, but “tokens”, which are mostly words but sometimes other things such as “begin/end codeblock” or “end of response”). The program is a guessing engine that guesses the next token repeatedly. The autocomplete on your phone is different in that it merely guesses which word follows the previous word. An LLM guesses the next word given the entire conversation so far (not always the entire conversation: the history may be truncated due to limited processing power).
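A toy sketch of the “guess the next token, append it, repeat” loop described above. It assumes whitespace-split words stand in for tokens and a simple count table stands in for the model; real LLMs use a neural network, not a lookup table. `train`, `generate`, and the `<end>` marker are hypothetical names for illustration. Setting `context_size=1` mimics phone-style autocomplete that only looks at the previous word; leaving it as `None` conditions on the whole prefix.

```python
from collections import Counter, defaultdict

END = "<end>"  # hypothetical "end of response" token


def train(corpus, context_size=None):
    """Count which token follows each context.
    context_size=1 -> previous word only (phone-style autocomplete).
    context_size=None -> the whole prefix (closer to an LLM reading the full conversation)."""
    table = defaultdict(Counter)
    for text in corpus:
        tokens = text.split() + [END]
        for i, nxt in enumerate(tokens):
            prefix = tokens[:i]
            key = tuple(prefix if context_size is None else prefix[-context_size:])
            table[key][nxt] += 1
    return table


def generate(table, prompt, context_size=None, max_tokens=20):
    """Repeatedly append the most frequent next token (greedy) until <end>."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        key = tuple(tokens if context_size is None else tokens[-context_size:])
        if key not in table:
            break  # unseen context: a lookup table has nothing to offer
        nxt = table[key].most_common(1)[0][0]
        if nxt == END:
            break
        tokens.append(nxt)
    return " ".join(tokens)


corpus = ["my cat likes fish", "my dog likes bones", "my dog likes bones"]
print(generate(train(corpus), "my cat"))                               # my cat likes fish
print(generate(train(corpus, context_size=1), "my cat", context_size=1))  # my cat likes bones
```

With only the previous word to go on, the autocomplete-style version forgets it was talking about a cat and picks the more common continuation.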

The “training data” is used to model how likely each token is to follow the tokens before it. But you can’t store, for every token, how likely it is to follow every single possible combination of 1 to <big number like 65536, depends on which LLM> previous tokens. So that’s what “neural networks” are for.
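Some rough arithmetic on why storing every combination is hopeless: with a vocabulary of V tokens there are V^n distinct sequences of exactly n tokens (the sum over all lengths from 1 to n is even larger). The 50,000-token vocabulary below is a hypothetical round number, not any specific model’s; 65,536 is the context length mentioned above. The digit count uses logarithms so Python never has to build the enormous integer.

```python
import math

VOCAB_SIZE = 50_000  # hypothetical vocabulary size; real LLMs vary


def possible_contexts(vocab_size, context_length):
    """Number of distinct token sequences of exactly `context_length` tokens."""
    return vocab_size ** context_length


def digits_in_count(vocab_size, context_length):
    """Decimal digits in vocab_size ** context_length, computed via log10."""
    return math.floor(context_length * math.log10(vocab_size)) + 1


print(possible_contexts(VOCAB_SIZE, 4))  # 6250000000000000000
print(digits_in_count(VOCAB_SIZE, 65_536))
```

Even 4-token contexts give about 6.25 quintillion combinations; at 65,536 tokens the count itself has roughly 300,000 digits.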

Neural networks are networks of mathematical “neurons”. Neurons take one or more inputs from other neurons, apply a mathematical transformation to them, and output the number into one or more further neurons. At the beginning of the network are non-neurons that input the raw data into the neurons, and at the end are non-neurons that take the network’s output and use it. The network is “trained” by making small adjustments to the maths of various neurons and finding the arrangement with the best results. Neural networks are very difficult to see into or debug because the mathematical nature of the system makes it pretty unclear what a given neuron does. The use of these networks in LLMs is as a way to (quite accurately) guess the probabilities on the fly without having to obtain and store training data for every single possibility.
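A minimal sketch of a single artificial neuron (a weighted sum of inputs plus a bias, passed through a sigmoid) and of training it by repeatedly nudging its numbers to reduce error. The nudging here is plain gradient descent, the usual method; “make small adjustments and keep what works best” is a looser picture of the same idea. Learning the logical AND of two inputs is a hypothetical toy task chosen because one neuron can solve it; an LLM stacks enormous numbers of these.

```python
import math


def neuron(weights, bias, inputs):
    """One artificial 'neuron': weighted sum of inputs plus bias, squashed by a sigmoid."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def train_neuron(examples, epochs=5000, learning_rate=0.5):
    """Nudge weights and bias a little after every example (stochastic gradient descent)."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            out = neuron(weights, bias, inputs)
            # For a sigmoid output with cross-entropy loss, the gradient w.r.t. the sum is (out - target).
            error = out - target
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(AND)
print([round(neuron(weights, bias, x)) for x, _ in AND])  # [0, 0, 0, 1]
```

Even here, the learned weights are just numbers; nothing in them says “AND” unless you test the outputs, which is a tiny version of why large networks are hard to see into.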

I don’t know much more than this, I just happen to have read a good article about how LLMs work. (Will edit the link into this post soon, as it was texted to me and I’m on PC rn)

I was making a joke because it seems AI turned against the same person on two independent occasions, but thank you for your efforts.

Okay, now explain how the human neuron expresses consciousness.

Right, a question that literal neuroscientists couldn’t answer.

I believe the technical term is “your brain is way more fucking complex”. We have like 50 (I’m not a neuroscientist, just studied AI) chemicals being transmitted around the brain, frequently. They’re used and passed on by cells which do biological and chemical things I don’t understand. Ever heard of dopamine, cortisol, serotonin? AI don’t got those. We have neurons that don’t connect to every other neuron - only tech bros would think that’s an acceptable expression. Our brain forms literal pathways, along which it transmits those chemicals. No, a physical connection is not the same as a higher average weight, and the people who came up with AI maths in the 50s would back me up.

AI uses floating point maths to draw correlations and make inferences. More advanced AI does this more per second and has had more training. Their neurons are a programming abstraction used to explain a series of calculations and inputs, they’re not actually a neuron, nor an advanced piece of tech. They’re not magic.

High schoolers could study AI for a single class, then neurobiology right after, and realise just how basic the AI model is when mimicking a brain. It’s not even close, but I guess Sam Altman said we’re approaching general intelligence, so I’m probably just a hater.

Everything you said is right, but you’re only proving that LLM weights are a severely simplified version of neurons. That neither proves that they don’t have consciousness nor that being a mathematical model precludes them from having consciousness at all.

In my opinion, the current models don’t express any consciousness, but I am against saying they don’t because they are a mathematical model, rather than because of the results we can measure. The fact that we can’t theoretically prove consciousness in the human brain also means we can’t theoretically disprove consciousness in an LLM. They aren’t conscious because they haven’t expressed enough to be considered conscious, and that’s the extent of what we should claim to know.

You can’t prove all ravens are black. The discovery of even one white raven would disprove the “fact” that all ravens are black, and we can by no means be sure that we gathered all ravens to test the theory.

However, we can look around and comment that there don’t appear to be any white ravens anywhere…

Do you know about the ‘bobo’ and ‘kiki’ study? I can’t remember the name. People made up words that don’t exist in English and asked people whether round objects are more bobo or kiki. AI can’t answer this question, not without being fed how to. Toddlers could answer it. It comes down to how it consumes information, and if there’s no pattern… When asked to define words it had rarely been fed, i.e. usernames people had made up, the AI’s apparent consciousness breaks down. As soon as something isn’t likely followed by another word, the machine breaks, and no one would pretend it has consciousness after that.

Learning models are just pattern recognition machines. LLMs are the kind that mix and match words really well. This makes them seem intelligent, but it just means they can express language and information in a way we understand, and tend to not do so. Consciousness gets into “what is the soul” territory, so I’m staying away from it. The best I can say of AI is that it’s interesting that language appears to be a system constructed well enough that we can teach it to machines. Even more so that we anthropomorphise models when they do it well.

AI doesn’t have memory, it can’t think for itself - it references what it has consumed - and it can’t teach itself new tricks. All of these are experimental research areas for AI. All of them lend themselves to consciousness. It’s just very good at sentence generation.

I don’t know what you’re even arguing. Your analogy breaks down because in this case, we can’t even see if the raven is black or not. No one can theoretically prove consciousness. The rest of your comment seems to be arguing that current AI has no consciousness, which is exactly what I said, so I guess this is just an attempt at supporting my point?

It would be nice if he decides to sue Ars Technica for that. Writers and publishers need to learn the hard way that you can’t use AI and trust it for publishing stuff that needs factual coherence. If not out of ethics, let it be out of fear of lawsuits.

Sue them for what? He would have to prove damages and they took it down.

Libel. Taking it down doesn’t undo the damage to reputation which libel is concerned with. They might not get any monetary damages awarded but could maybe force Ars to put out a retraction.

They put out a retraction: https://arstechnica.com/staff/2026/02/editors-note-retraction-of-article-containing-fabricated-quotations/

As much as I would like to see that happen, paying to fight a court case against Condé Nast just to get a retraction that they will stick somewhere invisible doesn’t really sound like a winning formula.

Letting them win because you’ve conceded before even playing is also a losing formula. Even if they don’t get awarded monetary damages they can probably at least get their legal expenses covered.

They pulled the article. What more are you hoping for?

could maybe force Ars to put out a retraction

To what end?

How about getting them to put an “e” after the “s” in their name instead?

In the US, libel requires you to prove that the writer knew that what they were writing is not true and that they did it to hurt you. Doing lazy research and trusting an AI is not going to meet that standard.

They didn’t do lazy research. They didn’t do any research, the lazy bums. They put a prompt into an AI, copy-pasted the output into a blog post and hit post. The only way they could have done less work is if they’d integrated the AI into the website to save them having to copy and paste.

Publicly making false statements using his name isn’t a crime by itself in his jurisdiction?

No, there are a bunch of things required to be met in the US for libel and a bunch of precedent which is why it’s hard to sue for it and succeed

Ars is just AI slop now? Sad.

Ars is owned by Condé Nast which also owns Reddit, so “AI slop” is part of their business.

I still trust Ars Technica (I don’t like them much but I do trust them… it’s complicated) and I trust Aurich (their founder/editor-in-chief) to act fairly. They don’t work on weekends or holidays, though, so he’s not touching it until Tuesday.

Aurich is the creative guy, Ken Fisher founded it.

ETA: Confirmed by Wikipedia.

Ah, okay. You just see Aurich in every other article. He’s like the head man. No idea what his real name is. The name Ken Fisher isn’t unknown to me, but neither is it familiar.

I guess I could have looked at Wikipedia. I guess I never really cared that much to read up on it. I’ve just been reading them off and on for, I don’t even remember how long. Even had an account once.

I was downvoted and insulted by this very Lemmy community when I said this just a month ago. Thank God people are starting to realize it now.

You know what’s cool about Lemmy? You can take away the voting aspect. Not from others, but from what you see. I see the arrows, for example, so I could up- or downvote you, but I don’t see how many other people have done the same. I literally just see arrows. And I sort by new, both in threads and on the timeline. So someone downvoted to oblivion still appears to me right in the timeline with no effect. It’s a shame this isn’t the default. That way, for it to be apparent that someone’s opinion is disliked, people would have to do more than click on arrows; they’d have to reply and go back and forth, put themselves out there.

I upvote helpfulness and kindness, downvote rudeness, and actually don’t vote on like 99% of what I see. I think voting made the social Internet worse. JMO

Which Ars writer was the article attributed to?

Benj Edwards and Kyle Orland

Damn. I thought Kyle would do better smh

This is what you get trying to offload all your work onto ChatGPT.

A better one is when a lawyer tries it: https://www.youtube.com/watch?v=oqSYljRYDEM