- cross-posted to:

- technology@lemmy.world

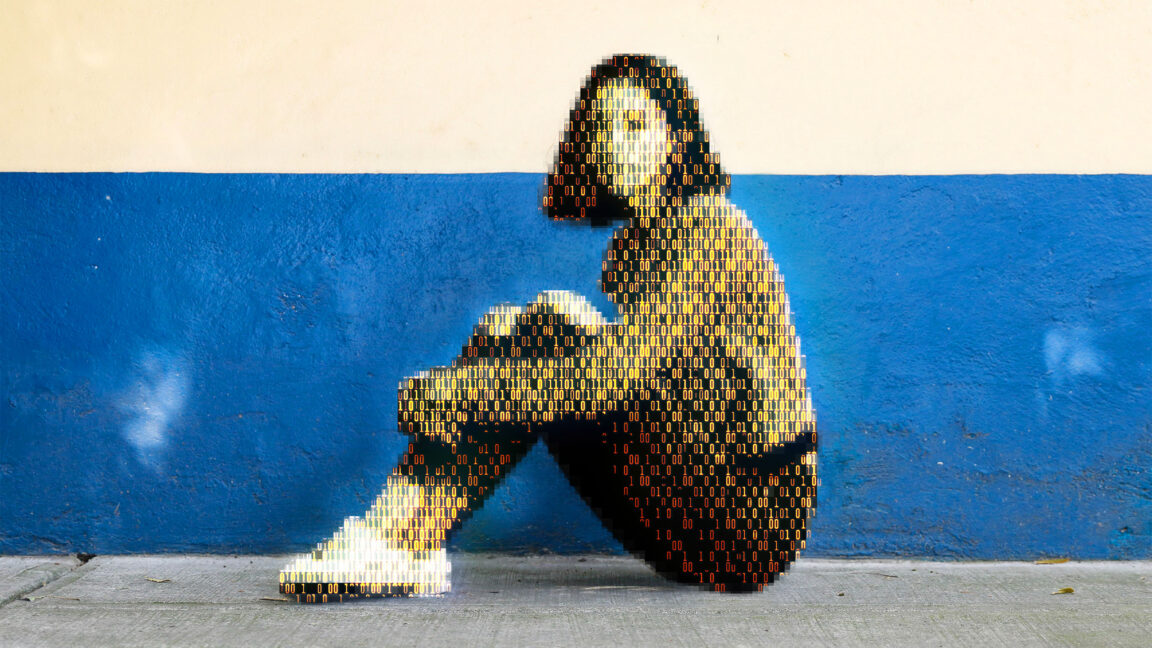

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an AI model designed to flag unknown CSAM at upload. It’s the earliest AI technology striving to expose unreported CSAM at scale.

This is a great development, albeit with a lot of soul-crushing work behind it, I assume. People who have to look at CSAM (or whatever the acronym is) have a miserable job, so I’m very supportive of trying to automate that work away from them.

Yeah, I’m happy for AI to take this particular horrifying job from us. Chances are it will be overtuned (too strict), but if there’s a reasonable appeals process, I could see it sparing a lot of people the trauma of regularly viewing the worst humanity has to offer, without major drawbacks.